Dealing with CORS in JupyterLite

Posted on January 29, 2023

Following my previous post, I intend to see how far I can push JupyterLite as a platform for data analysis in the browser. Having a full environment, with a sensible default set of libraries for dealing with data, just one link away is really something I could use.

But of course, for data analysis you need… well… data. There is certainly no shortage of public datasets on the internet, many of them published under Open Data initiatives such as EU Open Data.

But as soon as you try to fetch data from those sites directly from JupyterLite, you hit a wall named the Same Origin Policy.

Same Origin Policy

The Same Origin Policy is a protection mechanism that lets resource providers (hosts) restrict the use of their data to the pages they host. This is the safe thing to do when user data is involved, since it prevents third parties from gaining access to, e.g., the user's cookies and session IDs.

Notice that, when there is no user data involved, it is perfectly safe to relax this policy. In fact, as we will see, it is desirable to do so.

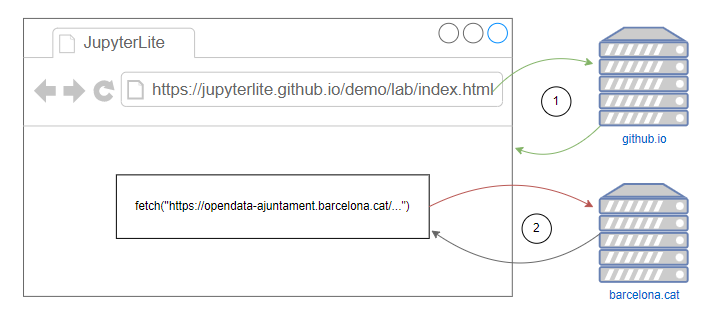

Browsers implement this protection by not allowing a page to access resources on an origin (scheme, host and port) other than the one it was loaded from, unless that other server explicitly allows it.

This behaviour hits hard any application doing third-party data analysis in the browser, as well as many WebAssembly “ports” of existing applications with networking capabilities, since the original desktop apps were never designed to deal with this kind of restriction[1] in the first place.
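Concretely, two URLs share an origin only if their scheme, host and port all match exactly, so even two different github.io subdomains are cross-origin. The minimal Python sketch below mimics that comparison with the standard library. The `origin` and `same_origin` names are illustrative, and real browsers apply further rules (for example for `file:` URLs and opaque origins) that it ignores.

```python
from typing import Optional, Tuple
from urllib.parse import urlsplit

# Ports implied when a URL does not spell one out
DEFAULT_PORTS = {"http": 80, "https": 443}


def origin(url: str) -> Tuple[str, str, Optional[int]]:
    """Return the (scheme, host, port) triple that identifies a URL's origin."""
    parts = urlsplit(url)
    scheme = parts.scheme.lower()
    port = parts.port if parts.port is not None else DEFAULT_PORTS.get(scheme)
    # .hostname is already lower-cased by urlsplit
    return scheme, parts.hostname or "", port


def same_origin(a: str, b: str) -> bool:
    """Same origin only if scheme, host and port all match exactly."""
    return origin(a) == origin(b)


print(same_origin("https://jupyterlite.github.io/demo/lab/",
                  "https://jupyterlite.github.io/demo/files/iris.csv"))  # True
print(same_origin("https://jupyterlite.github.io/demo/",
                  "https://someone.github.io/data.csv"))                # False: different host
print(same_origin("https://jupyterlite.github.io/demo/",
                  "https://raw.githubusercontent.com/jupyterlite/"))    # False
```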

For example, if you are using the JupyterLite deployment at jupyterlite.github.io, you will not be able to fetch data from any other origin (even another github.io subdomain) unless it explicitly allows it… which many data providers don't. The request will be blocked by the browser itself (step 2 in the diagram above). You will either need to download the data yourself and upload it to JupyterLite, or self-host JupyterLite and the data on your own server (using it as a proxy for data requests), which kinda takes all the convenience out of it. As an example, evaluating this snippet in JupyterLite works exactly as you would expect; swap in FAILS_CORS_DISABLED and the request is blocked:

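```python
import io

import pandas as pd
from js import fetch  # the browser's fetch(), exposed to Python by Pyodide

# Different origin, but GitHub serves raw files with CORS headers: can be fetched
WORKS = "https://raw.githubusercontent.com/jupyterlite/jupyterlite/main/examples/data/iris.csv"
# Open Data portal with CORS enabled: can be fetched too
WORKS_CORS_ENABLED = "https://data.wa.gov/api/views/f6w7-q2d2/rows.csv?accessType=DOWNLOAD"
# Open Data portal without CORS headers: the browser blocks it and fetch() raises an error
FAILS_CORS_DISABLED = "https://opendata-ajuntament.barcelona.cat/data/dataset/1121f3e2-bfb1-4dc4-9f39-1c5d1d72cba1/resource/69ae574f-adfc-4660-8f81-73103de169ff/download/2018_menors.csv"

# Top-level await works in JupyterLite's Pyodide kernel
res = await fetch(WORKS)
text = await res.text()
print(text)

# Load the CSV text into a DataFrame
df = pd.read_csv(io.StringIO(text))
```

There are two ways in which a data provider can accept cross-origin requests. The main (canonical, modern) one is Cross-Origin Resource Sharing (CORS): by adding explicit permissions in dedicated HTTP headers, a resource provider controls who can access their data (the whole world or selected domains) and how (which HTTP methods).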
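Roughly, for a simple cross-origin GET the browser sends an `Origin` header and only lets the page read the response if the server replies with an `Access-Control-Allow-Origin` header that is either `*` or exactly that origin. The Python sketch below models just that check. `response_readable` is a hypothetical name, and the sketch deliberately ignores preflight requests (`Access-Control-Allow-Methods`) and credentialed requests, for which browsers do not accept `*`.

```python
from typing import Mapping


def response_readable(page_origin: str, response_headers: Mapping[str, str]) -> bool:
    """Simplified CORS check for a simple, non-credentialed GET request."""
    # HTTP header names are case-insensitive
    headers = {name.lower(): value.strip() for name, value in response_headers.items()}
    allowed = headers.get("access-control-allow-origin")
    if allowed is None:
        # No CORS header: the browser blocks the page from reading the response
        return False
    # Either the whole world, or exactly the requesting origin
    return allowed == "*" or allowed == page_origin


page = "https://jupyterlite.github.io"
print(response_readable(page, {"Access-Control-Allow-Origin": "*"}))                    # True
print(response_readable(page, {"Content-Type": "text/csv"}))                            # False
print(response_readable(page, {"access-control-allow-origin": "https://example.org"}))  # False
```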

Whenever enabling CORS is not possible or practical (it requires access to the HTTP server configuration, which some hosting providers do not allow), there is a second way: the JSONP callback.

The JSONP Callback

The JSONP callback works along these lines:

- The calling page (e.g. JupyterLite) defines a callback function that takes a data parameter.

- The calling page (JupyterLite) loads a script from the data provider, passing it the name of the callback function.

- The data provider's script calls that function with the requested data.

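This works because script tags are exempt from the Same Origin Policy: a page may load and run scripts from any origin. The data arrives as code that calls our own function, rather than as a response the page tries to read, so CORS restrictions do not apply.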

This is not the recommended solution, because it pushes into the application something that belongs to another layer: both the server and the consuming web page have to be modified. It also means running whatever code the provider sends with full access to your page. One typical use case is supporting older browsers. The other is kind of accidental: downloading from (poorly configured?) Open Data portals. Most Open Data portals (including administrative ones) use pre-built data management systems such as CKAN. These often handle JSONP out of the box, while HTTP servers have CORS disabled by default. So keeping the defaults leaves you with JSONP.
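To make the mechanism concrete: a JSONP reply is not JSON but a line of JavaScript that passes the JSON to the requested callback, e.g. `window.corsCallBack({...});`. The Python sketch below shows both sides. `jsonp_wrap` and `jsonp_unwrap` are hypothetical helpers. Restricting callback names to dotted identifiers is one common precaution against script injection, not something every server does.

```python
import json
import re

# Dotted JavaScript identifiers only, such as "window.corsCallBack",
# so a crafted callback parameter cannot inject arbitrary code
CALLBACK_RE = re.compile(r"[A-Za-z_$][\w$]*(?:\.[A-Za-z_$][\w$]*)*")


def jsonp_wrap(payload: object, callback: str) -> str:
    """Server side: turn a JSON payload into a script that calls `callback`."""
    if not CALLBACK_RE.fullmatch(callback):
        raise ValueError(f"invalid callback name: {callback!r}")
    return f"{callback}({json.dumps(payload)});"


def jsonp_unwrap(script: str, callback: str) -> object:
    """Client side, for testing outside a browser: recover the payload."""
    script = script.strip()
    prefix, suffix = f"{callback}(", ");"
    if not (script.startswith(prefix) and script.endswith(suffix)):
        raise ValueError("response is not a call to the expected callback")
    return json.loads(script[len(prefix):-len(suffix)])


body = jsonp_wrap({"success": True, "result": {"records": []}}, "window.corsCallBack")
print(body)  # window.corsCallBack({"success": true, "result": {"records": []}});
print(jsonp_unwrap(body, "window.corsCallBack"))  # {'success': True, 'result': {'records': []}}
```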

Implementing a JSONP helper in JupyterLite

One of the things I love about the browser as a platform is that it is… pretty hackable… just press F12 and you can enter the kitchen. For example, you can see how JupyterLite “fakes” its filesystem on top of IndexedDB, which is an API for storing persistent data in the browser.

So we have a way to make cross-origin requests to servers that implement JSONP, and we can also fiddle with JupyterLite's virtual filesystem… would it be possible to write a helper that downloads datasets into the virtual filesystem? You bet! Just paste the following code into a JavaScript kernel cell, or use the %%javascript magic in a Python one:

```javascript
// Download a dataset from a JSONP-capable server and store it as a file
// in JupyterLite's IndexedDB-backed virtual filesystem.
// Returns a Promise that resolves with the file path once the file is saved.
window.saveJSONP = (urlString, file_path, mime_type = 'application/json') => {
  return new Promise((resolve, reject) => {
    const sc = document.createElement('script');
    const url = new URL(urlString);
    // Ask the server to wrap its response in a call to our callback
    url.searchParams.append('callback', 'window.corsCallBack');
    sc.src = url.toString();
    sc.onerror = () => reject(new Error(`Could not load ${sc.src}`));

    // Called by the script returned by the data provider
    window.corsCallBack = (data) => {
      sc.remove();
      // Open the existing JupyterLite file storage
      const open = indexedDB.open('JupyterLite Storage');

      // The database must already exist: never create or migrate it here
      open.onupgradeneeded = () => {
        throw Error('Error opening IndexedDB. Should not ever need to upgrade JupyterLite Storage Schema');
      };
      open.onerror = () => reject(open.error);

      open.onsuccess = () => {
        // Start a new transaction on the "files" store
        const db = open.result;
        const tx = db.transaction('files', 'readwrite');
        const store = tx.objectStore('files');
        const now = new Date().toISOString();
        const value = {
          name: file_path.split(/[\\/]/).pop(),
          path: file_path,
          format: 'text', // the content is always stored as a JSON string
          created: now,
          last_modified: now,
          content: JSON.stringify(data),
          mimetype: mime_type,
          type: 'file',
          writable: true,
        };
        // put() inserts the record, or overwrites it if the path already exists
        store.put(value, file_path);

        // Close the db when the transaction is done
        tx.oncomplete = () => {
          db.close();
          resolve(file_path);
        };
        tx.onerror = () => reject(tx.error);
      };
    };

    document.head.appendChild(sc);
  });
};
```

Then, each time you need to download a file, you can just use the following JavaScript:

```javascript
%%javascript
// CKAN datastore_search endpoint of the Barcelona Open Data portal
var url = 'https://opendata-ajuntament.barcelona.cat/data/es/api/3/action/datastore_search?resource_id=69ae574f-adfc-4660-8f81-73103de169ff';
window.saveJSONP(url, 'data/menors.json');
```

To be clear, use the same kind of cell for both the definition and the call: either a Python kernel with the %%javascript magic, or the JavaScript kernel. Otherwise they won't see each other.

Then, from a Python cell, we can read it the standard way:

```python
import json

import pandas as pd

with open('data/menors.json', 'r') as f:
    data = json.load(f)

# datastore_search returns the rows under result.records
df = pd.DataFrame(data['result']['records'])
df
```

You can find a notebook with the whole code for your convenience in this Gist.
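One caveat when using datastore_search this way: CKAN returns at most `limit` rows per call (100 by default, unless the portal configures otherwise) and reports the full row count in `result.total`. Larger datasets therefore need several requests with increasing `offset`. The Python sketch below generates those page URLs. `page_urls` is a hypothetical helper, and it assumes the portal accepts the standard `limit`/`offset` parameters. Each URL would then be passed to `saveJSONP` one at a time, with its own file name, since the helper relies on a single global callback.

```python
from typing import List
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def page_urls(base_url: str, total: int, limit: int = 100) -> List[str]:
    """Hypothetical helper: one datastore_search URL per block of `limit` rows."""
    parts = urlsplit(base_url)
    # Keep the existing query parameters (e.g. resource_id)
    params = dict(parse_qsl(parts.query))
    urls = []
    for offset in range(0, total, limit):
        params.update(limit=str(limit), offset=str(offset))
        urls.append(urlunsplit(parts._replace(query=urlencode(params))))
    return urls


base = ("https://opendata-ajuntament.barcelona.cat/data/es/api/3/action/"
        "datastore_search?resource_id=69ae574f-adfc-4660-8f81-73103de169ff")
# total would come from data['result']['total'] of a first request
for url in page_urls(base, total=250):
    print(url)
```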

Conclusions

We are just starting to see the potential of WebAssembly-based solutions and the browser environment (IndexedDB…). This will increase the demand for data that is accessible across origins.

If you are a data provider, please consider enabling CORS to promote the usage of your data. Otherwise you will be locking a growing market of web-based analysis tools out of your data.
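For providers serving static files, enabling CORS can be as small as adding one response header. As an illustration only, here is a sketch using Python's standard-library `http.server`. The `CORSRequestHandler` and `make_server` names are hypothetical, and in production the same header would normally be set in the web server or CDN configuration. The `*` value fits public data that involves no cookies or user accounts.

```python
from functools import partial
from http.server import SimpleHTTPRequestHandler, ThreadingHTTPServer


class CORSRequestHandler(SimpleHTTPRequestHandler):
    """Serve files from a directory, allowing pages from any origin to read them."""

    def end_headers(self):
        # Public, non-personal data: let any origin read the responses
        self.send_header("Access-Control-Allow-Origin", "*")
        super().end_headers()


def make_server(directory: str, port: int = 8000) -> ThreadingHTTPServer:
    """Build a local server for `directory`; call serve_forever() to run it."""
    handler = partial(CORSRequestHandler, directory=directory)
    return ThreadingHTTPServer(("127.0.0.1", port), handler)


# Usage: make_server("data").serve_forever()
```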

References

- Simple IndexedDB example

- Sample code for reading and writing files in JupyterLite (this is where the idea for this post comes from).

- On CORS and how to enable it.

- A W3C article on how to open your data by enabling CORS and why it is important, with a list of providers implementing it.

- A test web page to check if a server is CORS enabled.